Projects

My projects and research.

Reinforcement Learning for Quadruped Walking (2025)

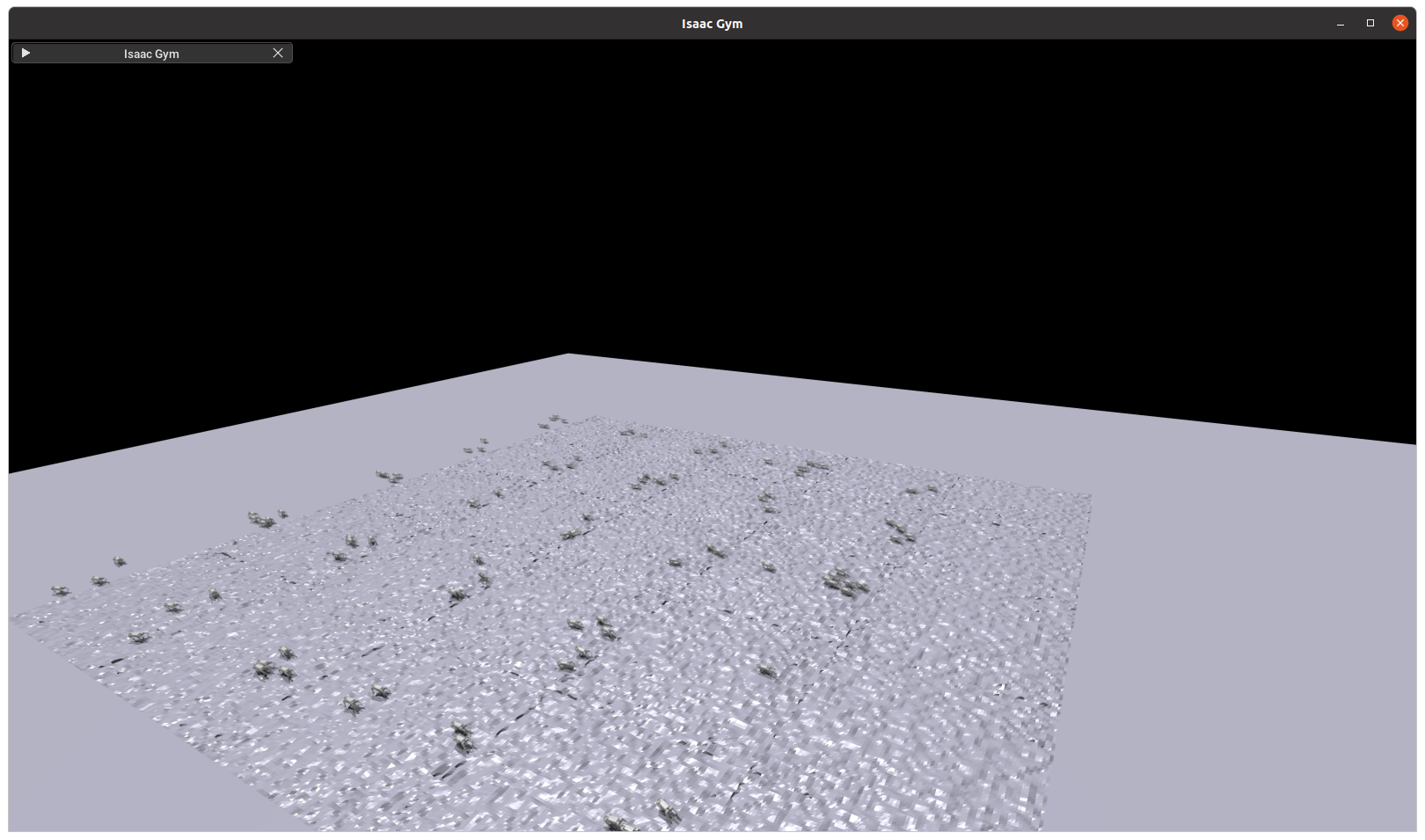

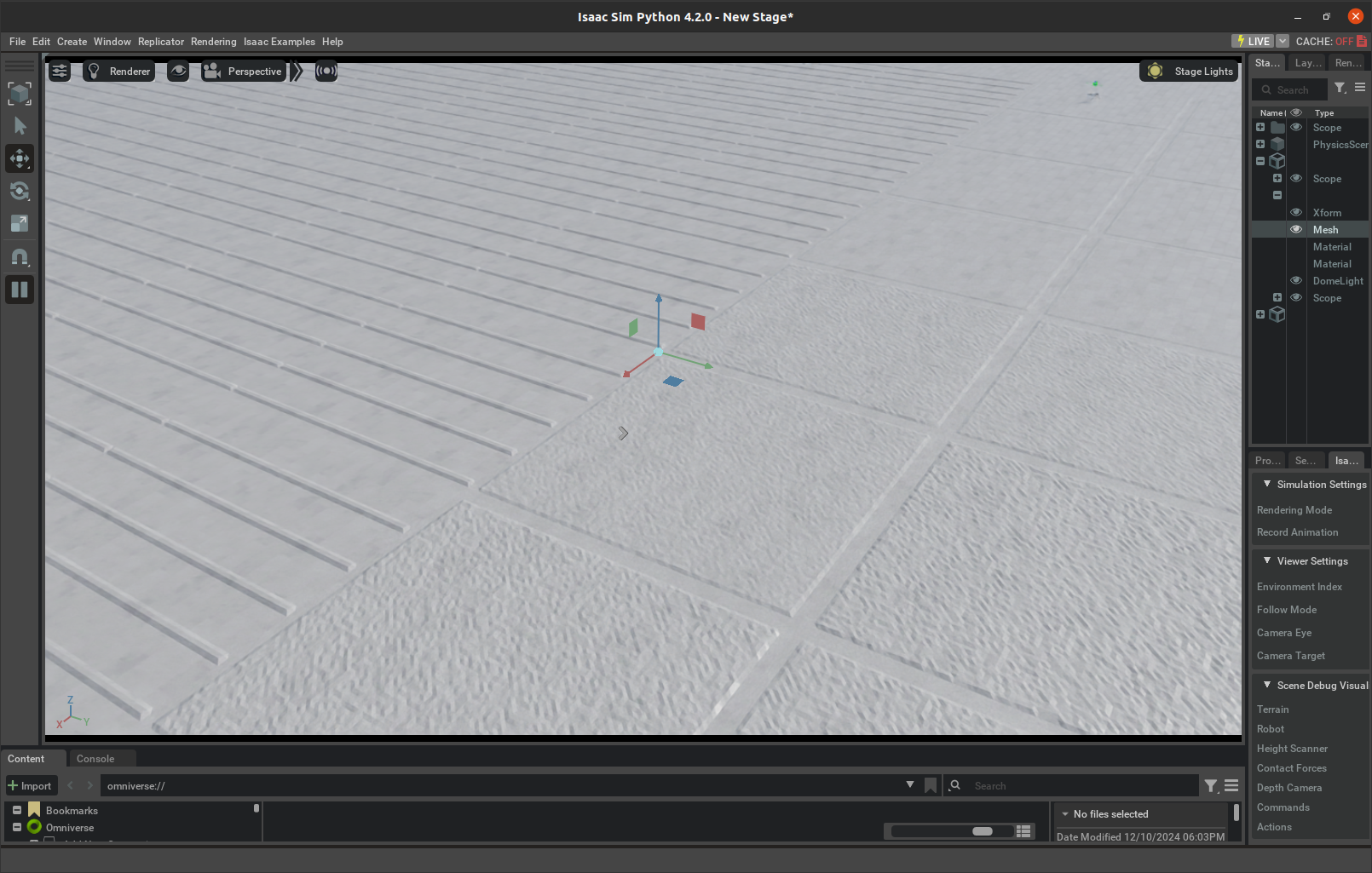

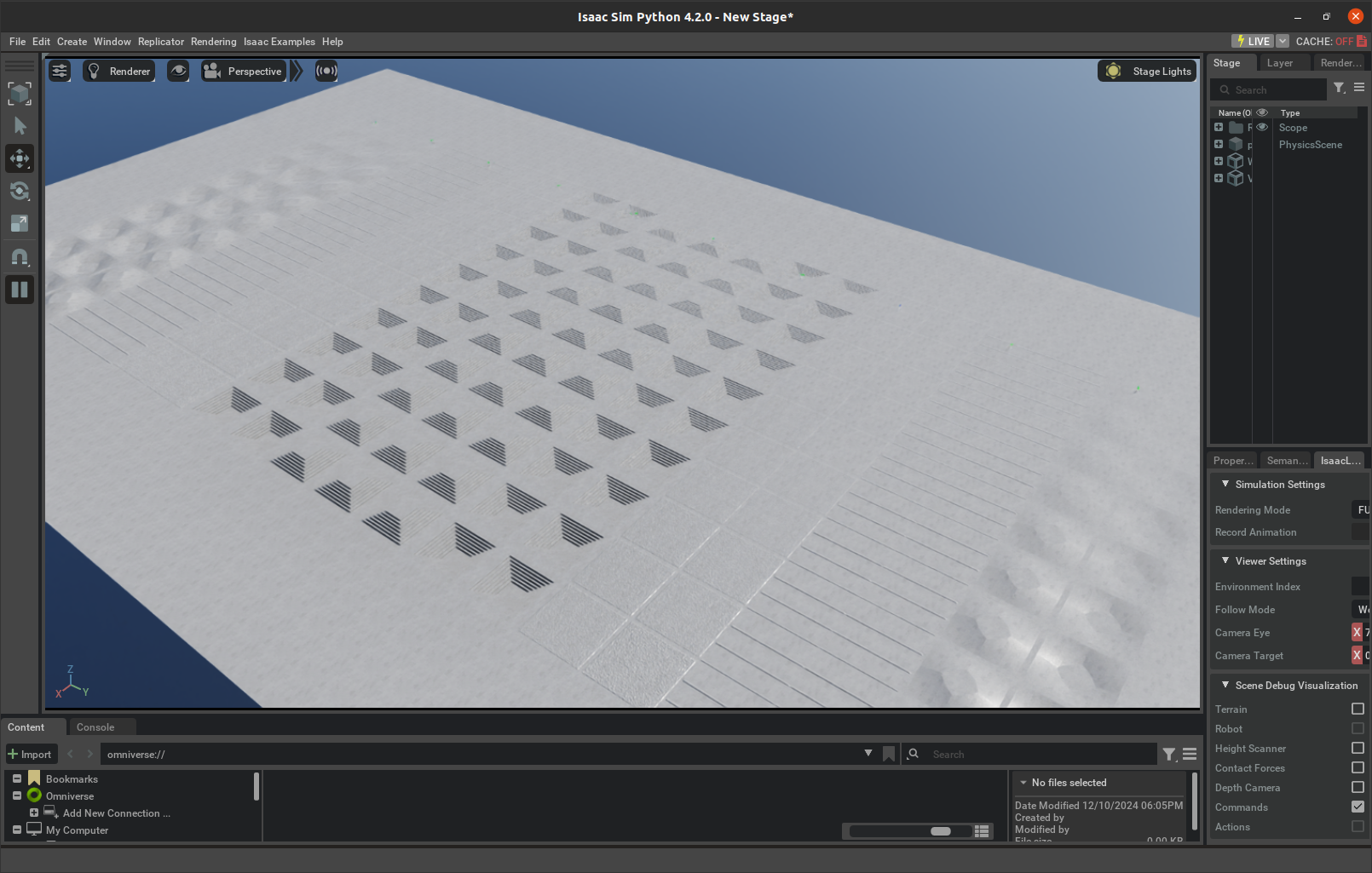

Locomotion

Teaser

Stable, symmetric walking on rough terrain (blind policy).

Overview

We study RL policies for elegant quadruped locomotion. By shaping training terrains and tuning rewards to favor long, low strides and consistent body height, we obtain more natural, symmetric gaits across flat and rough terrains. We also explore depth-camera perception to climb stairs and navigate obstacles.

Methodology

Policies are trained in IsaacLab simulation on diverse terrains (flat, rough, rails, waves). The reward emphasizes velocity tracking, base height, and smooth actuation to discourage pronking. Training runs many parallel environments on a GPU.
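As a rough illustration of how a reward with these three terms could be composed, here is a minimal sketch in plain Python. The function name, weights, nominal height, and the exponential tracking kernel are all assumptions chosen for illustration. They are not the values or code used in this project, and the sketch does not use the IsaacLab API.

```python
import math


def locomotion_reward(
    cmd_vel_xy,           # commanded planar velocity (vx, vy) in m/s
    base_vel_xy,          # measured planar base velocity (vx, vy) in m/s
    base_height,          # measured base height in m
    action,               # current joint action vector
    prev_action,          # previous joint action vector
    target_height=0.30,   # assumed nominal base height (hypothetical)
    w_track=1.0,          # assumed term weights (hypothetical)
    w_height=2.0,
    w_smooth=0.01,
    sigma=0.25,           # assumed width of the tracking kernel
):
    # Velocity tracking: 1.0 when the command is matched exactly,
    # decaying with the squared tracking error.
    vel_err = sum((c - v) ** 2 for c, v in zip(cmd_vel_xy, base_vel_xy))
    r_track = math.exp(-vel_err / sigma)

    # Base height: penalize squared deviation from the nominal height,
    # which discourages the vertical bouncing typical of pronking.
    p_height = (base_height - target_height) ** 2

    # Smooth actuation: penalize large changes between consecutive actions.
    p_smooth = sum((a - b) ** 2 for a, b in zip(action, prev_action))

    return w_track * r_track - w_height * p_height - w_smooth * p_smooth
```

With perfect tracking, nominal height, and unchanged actions the reward is `w_track`; any height error or action jump lowers it, which is the pressure that pushes the policy away from bouncy gaits.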

Walking Gaits Without Terrain Shaping

Training on flat ground only often induces pronking and irregular gaits. Adding terrain structure encourages more natural, symmetric gaits.

Final Results

Deployments on Unitree Go1 Edu

Vision‑Based Human‑Following Robot (2025)

Perception

Overview

Implemented a vision‑based human‑following capability on the Triton mobile robot. Perception is powered by YOLOv5 running on a Jetson Nano with an Intel RealSense D435 RGB‑D camera, and the system supports both teleoperation and autonomous modes. Gesture‑based activation was integrated using MediaPipe to enable hands‑free control.

Methodology

The architecture comprises four modules: Human Recognition, Human Following, Gesture Recognition, and Obstacle Avoidance. On‑board RGB‑D and LiDAR provide target tracking and collision avoidance. An external webcam supplies gesture inputs that are fused into the control state machine for safe mode switching.
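One way to picture gesture-driven mode switching with safety checks is a small state machine like the sketch below. The mode names, gesture labels (`"open_palm"`, `"fist"`), and the `STOPPED` state are hypothetical placeholders, not the project's actual states or gesture set; the sketch only shows the idea that safety conditions override gesture requests.

```python
from enum import Enum, auto


class Mode(Enum):
    TELEOP = auto()    # operator drives the robot
    FOLLOW = auto()    # robot follows the tracked person autonomously
    STOPPED = auto()   # robot halted after a failed safety check


# Hypothetical gesture labels mapped to requested mode changes.
TRANSITIONS = {
    (Mode.TELEOP, "open_palm"): Mode.FOLLOW,
    (Mode.FOLLOW, "fist"): Mode.TELEOP,
    (Mode.STOPPED, "fist"): Mode.TELEOP,
}


def next_mode(mode, gesture, obstacle_too_close=False, target_visible=True):
    """Return the next mode given the latest gesture and safety flags."""
    safe_to_follow = target_visible and not obstacle_too_close

    # Safety first: leave FOLLOW as soon as it is no longer safe.
    if mode is Mode.FOLLOW and not safe_to_follow:
        return Mode.STOPPED

    # Unknown or missing gestures leave the mode unchanged.
    requested = TRANSITIONS.get((mode, gesture), mode)

    # Refuse to enter FOLLOW unless the safety checks pass.
    if requested is Mode.FOLLOW and not safe_to_follow:
        return mode
    return requested
```

Keeping the transition table separate from the safety logic means a new gesture can be added without touching the checks that gate autonomous motion.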

Results

- Human recognition: ≥ 85% accuracy under varied lighting and occlusion conditions.

- Human following: up to 1 m/s with ≤ 0.3 m mean distance error.

- Gesture recognition: ≥ 80% classification accuracy with ≤ 1.5 s end‑to‑end latency.

- Obstacle avoidance: ≥ 95% collision‑free trials in store‑like layouts.

Contributions

- Designed and implemented obstacle‑avoidance algorithms with LiDAR fusion and safety checks.

- Built the laptop‑to‑Triton communication bridge for remote control, telemetry, and logging.

- Implemented hand‑gesture recognition and integrated it with the control pipeline for mode selection.

Videos

ROS laptop‑robot connection test.

Hand‑gesture recognition and motion tests.

Plastic Bottle Sorting with 6DOF Arm (2023)

Manipulation

End‑to‑end vision‑guided sorting of plastic bottles using a 6DOF arm. Real‑time perception, conveyor tracking, and a custom gripper enable high‑accuracy, high‑throughput pick‑and‑place on a moving line.

Results & Impact

- ≈98% detection and sorting accuracy on moving conveyor

- Reduced cycle time via optimized gripper geometry and grasp sequencing

- Robust to lighting/pose variation with real-time instance segmentation

- Higher throughput from stabilized pick-and-place and fewer false rejects

System Overview

Components: real‑time Mask R‑CNN for instance segmentation; belt/encoder synchronization and object tracking; motion planning with grasp sequencing; and a CAD‑designed end‑effector to improve grasp stability and tolerance to bottle variance.
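Belt/encoder synchronization can be illustrated with a short sketch: if the encoder count is latched together with each camera frame, a bottle's position along the belt at any later moment follows from the encoder travel since detection. The class, function names, and the `mm_per_count` calibration constant below are assumptions for illustration, not the project's actual interfaces.

```python
from dataclasses import dataclass


@dataclass
class TrackedBottle:
    x_at_detection_mm: float    # position along the belt when the camera saw it
    encoder_at_detection: int   # encoder count latched with that camera frame


def belt_position_mm(bottle, encoder_now, mm_per_count):
    """Current position along the belt, advanced by encoder travel since detection."""
    travelled_counts = encoder_now - bottle.encoder_at_detection
    return bottle.x_at_detection_mm + travelled_counts * mm_per_count


def counts_until_pick(bottle, encoder_now, mm_per_count, pick_x_mm):
    """Encoder counts remaining until the bottle reaches the pick line.

    A negative result means the bottle has already passed the pick line.
    """
    remaining_mm = pick_x_mm - belt_position_mm(bottle, encoder_now, mm_per_count)
    return remaining_mm / mm_per_count
```

For example, a bottle detected at 100 mm with the encoder at 1000 counts and a calibration of 0.5 mm per count sits at 200 mm once the encoder reads 1200. Working in encoder counts rather than elapsed time keeps the estimate valid even if the belt speed varies.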

Videos

Arm operation: calibration and sorting cycle (YouTube).

Robot and camera calibration steps (YouTube).

Ball‑Picking Robot (NTUT PBL 2023 - Second Prize)

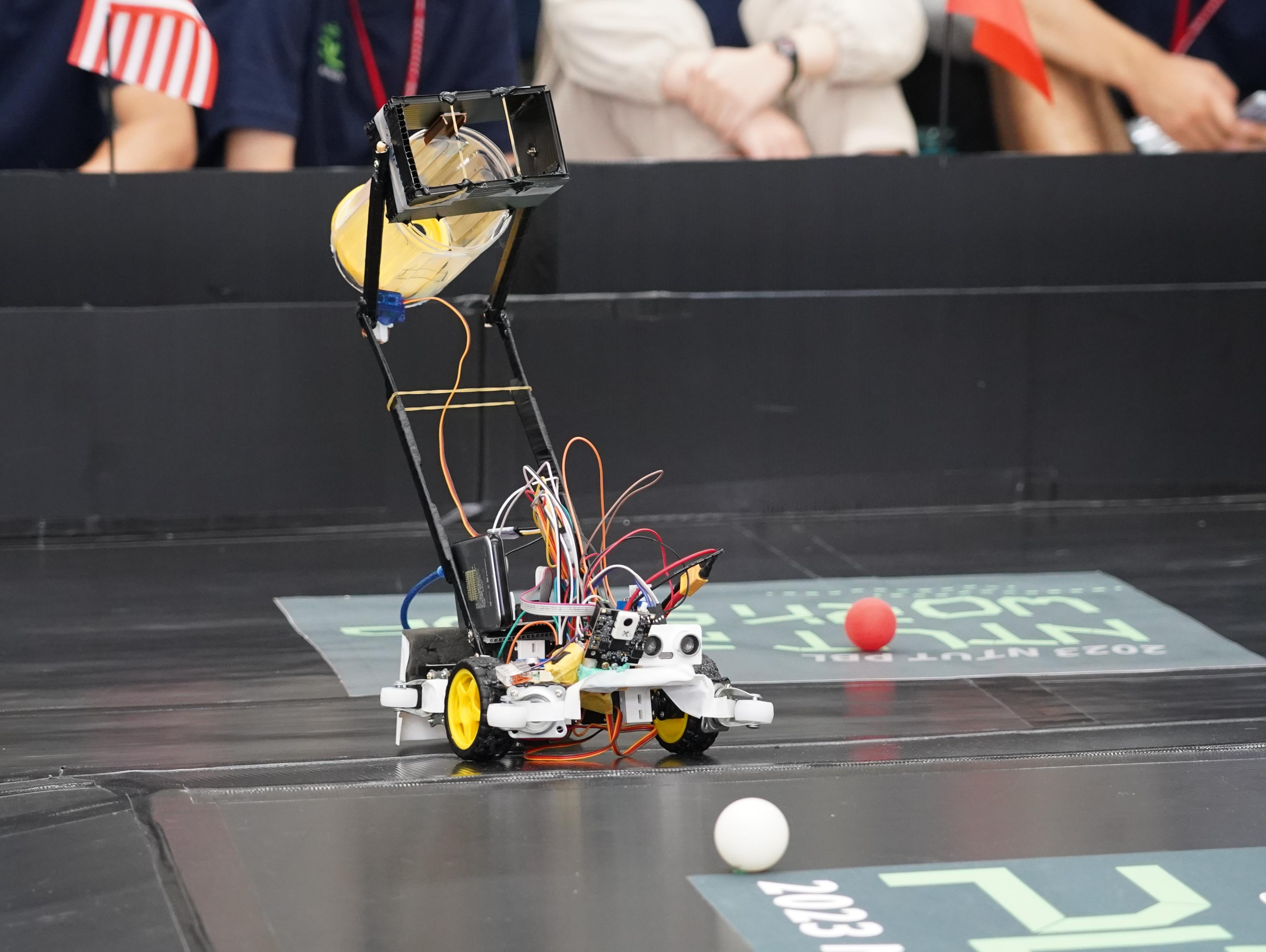

Competition

Overview

Represented Ho Chi Minh City University of Technology (HCMUT) at the Project‑Based Learning program hosted by National Taipei University of Technology (NTUT). In a 10‑day sprint, our team built a ball‑picking robot and placed second overall.

Technical Highlights

- Designed an OpenCV vision pipeline to robustly detect red balls and reject distractors, improving selection accuracy by more than 25% compared with early prototypes.

- Implemented an embedded C control stack to recognize targets, compute throw trajectories, and actuate the pick‑and‑throw mechanism autonomously.

- Coordinated integration and on‑site testing across a 10‑student team.
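The throw-trajectory step mentioned above can be illustrated with a drag-free ballistic model. This is a minimal sketch under stated assumptions: a fixed launch angle, no air resistance, and a target given as horizontal distance and height relative to the release point. The robot's control stack was written in embedded C; Python is used here only for readability, and the function name and parameters are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def launch_speed(distance_m, height_m, angle_deg):
    """Launch speed needed to hit a target with a fixed launch angle.

    distance_m: horizontal distance to the target
    height_m:   target height relative to the release point
    angle_deg:  launch angle above the horizontal
    """
    theta = math.radians(angle_deg)
    # From y(x) = x*tan(theta) - g*x^2 / (2*v^2*cos^2(theta)), solved for v.
    denom = 2.0 * math.cos(theta) ** 2 * (distance_m * math.tan(theta) - height_m)
    if distance_m <= 0 or denom <= 0:
        raise ValueError("target is not reachable at this launch angle")
    return math.sqrt(G * distance_m ** 2 / denom)
```

For a target at the same height as the release point and a 45 degree launch, this reduces to the familiar `sqrt(g * d)`. A real mechanism would also need a calibration from launch speed to actuator command, which is outside this sketch.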

Media

Sources: YouTube workshop clip and Taipei Tech Post Issue 61.